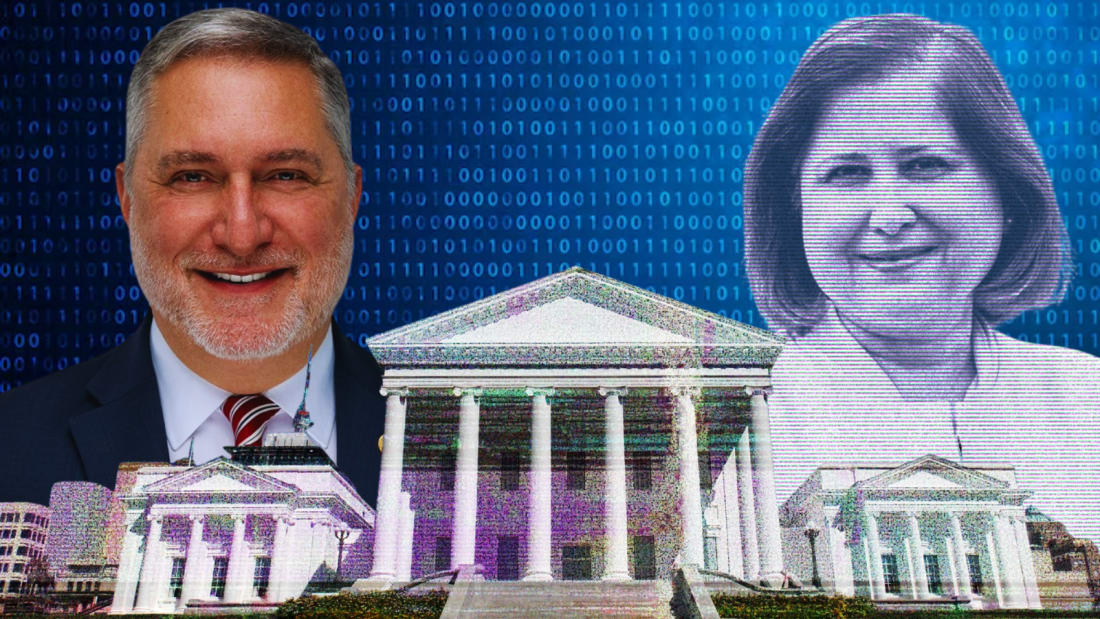

On the night of October 21st, 2025, the Republican nominee for Lieutenant Governor of Virginia beamed behind a podium at an event he never thought would actually happen. After nearly 10 challenges to his opponent, Democratic Virginia State Senator Ghazala Hashmi, John Reid finally got the debate he’d been demanding for months, in what had been the only statewide race without one.

The two “candidates” traded turns answering questions in what was, on its face, one of the more cordial debates of the season. Reid laid out his conservative positions on book bans, LGBTQ rights, reproductive rights, and workers’ rights. Hashmi’s “responses” aligned cleanly with her actual record as a Democratic nominee.

The only problem was that Senator Hashmi wasn’t in the room. In fact, she wasn’t even participating.

Miles away in Fredericksburg, Hashmi was attending a stop on the “Bills Are Too Damn High” tour, an event hosted by Clean Virginia to highlight rising utility bills. She hadn’t agreed to any debate at all.

With only 13 days left in the cycle, and desperate to avoid being the lone candidate without a single debate, Reid’s campaign unveiled what they deemed “the next best alternative.” A monitor stood on the podium across from him displaying a photo of Senator Hashmi. The voice answering the questions, however, belonged not to her but to an AI-generated simulation built from her public statements and questionnaires.

While the words didn’t technically misrepresent her platform, the stunt raised major questions about the use of artificial intelligence in politics—particularly around consent and manipulation of likeness. Debates are, by definition, mutual agreements. When one side writes the rules, picks the questions, and fabricates the participation of the other candidate, the event becomes little more than performance art.

One would expect some mechanism to regulate AI applications in campaigns. Instead, most states, Virginia included, have none. Virginia lawmakers have tried multiple times in recent years to rein in the use of synthesized media, and every attempt has failed.

During the 2025 session alone, lawmakers made several attempts to address the issue. Senator Adam Ebbin introduced SB571 and Delegate Michelle Maldonado introduced HB697, bills that would have made it a Class 1 misdemeanor to use synthetic media for fraud, defamation, or libel by manipulating another person’s voice or likeness. Both died in committee.

Senate Majority Leader Scott Surovell introduced SB775, and Delegate Mark Sickles carried its House companion, HB2479, requiring disclaimers on political ads that use synthetic media. A basic disclosure—simply acknowledging the use of AI. The legislature passed both. Governor Youngkin vetoed them, citing fears that the regulations were “too broad” and would stifle innovation.

Killing these bills opened the door for Reid’s AI “debate” and others like it. Reid may not have crossed into outright fabrication or slander, but the line is perilously thin—and absolutely nothing in Virginia law prevents a future campaign from crossing it as the technology becomes more realistic.

Political operatives and candidates of all parties now have to confront the same question: How much will innovation cost us in political integrity—and what are we willing to do to maintain the public’s trust?

Innovation before Integrity

On his first day back in office, President Donald Trump signed an executive order establishing America’s AI Action Plan. The order stripped away regulatory barriers, promoted open-source large language models, and fast-tracked data center permitting. But its most consequential—and often overlooked—provision prohibits states from regulating artificial intelligence at all.

Trump’s directive argues for a “single federal standard” to avoid a patchwork of state rules and directs federal agencies to weigh a state’s “AI regulatory environment” when allocating discretionary funds.

Soon after, Congressman Michael Baumgartner of Washington introduced legislation to codify the executive order and formally block states from imposing their own AI restrictions.

With the guardrails gone, AI development has exploded. The “AI slop” your parents share on Facebook has become disturbingly realistic. You might remember the viral clip of a woman smashing a glass bridge with a cartoonishly giant boulder. While ridiculous, thousands believed it—proving that realism can override reason. If absurd content can fool so many, imagine what happens when the videos are plausible and politically explosive.

AI content that was once easy to spot has taken on an ultrarealistic face, and many people with limited technological literacy, who unfortunately make up a significant part of our nation’s electorate, believe it.

Political and Campaign Abuse

During the 2025 cycle here in Virginia, we saw a small but telling preview of just how quickly this can spiral. Posts and replies on social media became breeding grounds for AI-driven misinformation. The Spanberger campaign even said they had to field angry calls from top donors and long-time supporters about an AI-generated video circulating online that featured Abigail Spanberger saying incredibly reprehensible things. The clip was entirely fabricated. And while X eventually removed it, the damage had already been done — and the election had already passed.

Situations like this are only going to get worse and harder to detect as guardrails remain nonexistent and self-described “innovationphiles” insist that any regulation is an attack on progress. Here in Virginia, we’re watching it unfold in real time as data centers grow like weeds throughout the Commonwealth. With rapid development comes more accessibility; with more accessibility comes more opportunities to both dupe and be duped by artificially generated content.

And this isn’t isolated to state races. AI has already impacted the 2026 midterm elections in one of the most competitive U.S. Senate races in the country. On November 10, the campaign account for Mike Collins, a Republican candidate for U.S. Senate in Georgia, posted an AI-generated video of Senator Jon Ossoff after the Senate voted to reopen the government. Ossoff had voted against the bill because it lacked a vote on ACA subsidies. The video, at first glance, looked incredibly realistic, showing Ossoff casually admitting that he voted against reopening the government despite the harm to farmers and SNAP beneficiaries.

And people bought it. A lot of people. Outrage piled up in the comments, accusing Ossoff of callousness, incompetence, and even corruption. Then the outrage shifted to Mike Collins after the video was debunked — though by then, it had already done its damage.

The problem here isn’t just ethical, though it is deeply unethical. It’s structural. As of right now, nothing illegal has occurred. There is currently no mechanism at the Federal Election Commission to regulate AI-generated political content. No requirement that campaigns disclose altered or synthetic footage. No rules governing distribution.

The most we can be grateful for are the crowdsourced “community notes” on X. This volunteer-run fact-check system is now, absurdly, one of the only real-time defenses against electoral misinformation.

But the actual danger is the speed and willingness with which voters believed these videos. The visceral reactions these fakes triggered — anger, panic, resentment, distrust — are an unfortunate preview of how hostile the electoral terrain is about to become.

Fake News’ Fake News?

However, it’s not just everyday civilians getting duped by these increasingly realistic videos — even major media players are falling for them. Fox News was recently caught running an entire news story based on footage they believed was real, only to discover that it was completely AI-generated.

After OpenAI released Sora 2 on September 30, social media was flooded with thousands of hyperrealistic videos created with the new text-to-video model. The explosion of content coincided with a noticeable uptick in what can only be described as “AI slop” circulating across Facebook, X, and TikTok. Some of it was harmless nonsense, but a disturbing amount followed a clear pattern.

A trend emerged showing AI-generated clips made to look like shaky iPhone videos inside grocery stores, gas stations, or fast-food restaurants, as well as mock interviews with supposed SNAP beneficiaries. These videos depicted people bragging about selling food stamps for cash or using EBT to purchase decadent meals — all designed to “confirm” existing right-wing narratives about food stamp fraud.

Fox News took the bait. One video in particular, showing a woman yelling at a cashier — “They cut my food stamps. I ain’t paying for none of this s**t. I got babies at home that gotta eat.” — was treated as legitimate and turned into an entire segment pushing the idea of rampant SNAP abuse. In reality, the clip was created by several racist, right-wing influencers who have been seeding these fakes as part of a coordinated effort to inflame outrage.

But this is precisely what these AI content creators are banking on: that confirmation bias is so strong, and the videos are now so realistic, that people will believe something if it aligns with the narrative they already carry in their heads. And once they believe it, they’ll share it — spreading misinformation like an infectious disease.

The frightening part is not just that everyday people fall for these videos, but that major media outlets — ones with the power to shape national opinion — are being fooled, too. The line between genuine reporting and synthetic fiction is blurring, and the people producing these deepfakes know precisely how to exploit that vulnerability.

Artificial Plausible Deniability

There’s another side to this coin — one that might be even more dangerous than manufactured scandals: the growing ability for people to deny real wrongdoing by blaming it on AI. As synthetic media gets more realistic, we’re entering an era where anyone caught on camera or in a recording can simply shrug and say, “That wasn’t me. That was AI.”

AI is generating a level of distrust so pervasive that genuine evidence risks becoming meaningless. Frankly, it’s surprising that Donald Trump — the PR spinner-in-chief — or his allies haven’t leaned harder into this strategy already in response to the Jeffrey Epstein investigations. It would be easy for them to claim that photos, emails, or references connecting Epstein to Trump were artificially generated. We’re likely only a few news cycles away from this exact defense.

We’ve already seen hints of this tactic online. After footage surfaced from the No Kings rallies, countless right-wing accounts claimed the images were AI-generated or just altered footage from the 2017 Women’s March. This emerging line of reasoning can be weaponized to delegitimize genuine public demonstrations, especially those pushing back against authoritarianism, something we’ve already witnessed during Trump’s presidency.

The problem is that AI detection itself is still in its infancy. The tools used to verify manipulated content are already struggling to keep pace with the new models released every few months. The technology used to fabricate reality is accelerating far faster than the technology designed to distinguish real from fake.

And that raises several critical, unsettling questions:

Will more politicians start denying criminal, corrupt, or inappropriate behavior by exploiting the public’s growing distrust and claiming real evidence is synthesized?

Will citizen-led protests and grassroots movements be delegitimized — not through propaganda, but through the insinuation that any photo or video of them is altered media?

Could we see another January 6th — in America or abroad — brushed aside by claiming the footage wasn’t real, that rioters were AI-generated, that the chaos never happened?

These are not hypothetical risks. They are the natural progression when we allow algorithms and large language models to blur, distort, and rewrite our reality on a screen — and when we fail to build the guardrails that protect truth itself.

Constructing the Guardrails

The rapid development of artificial intelligence in 2025 has had a cascade of negative impacts on the country: informational, environmental, and economic. Trump’s executive order attempts to block a patchwork of state regulations, but it may lack real teeth without congressional action. In the meantime, states can and should begin by regulating AI’s use in politics.

Big Tech already knows the stakes. Between 2024 and 2025, Silicon Valley companies spent $1.1 billion on political ads and lobbying campaigns to stop these regulations before they take root. And Trump knows precisely how much influence these tech billionaires have, which is why he placed them front and center at his inauguration. Their opposition isn’t subtle: meaningful regulation would cut directly into their bottom line, even at the expense of democratic stability. So they are spending enormous political and financial capital to stop it.

Despite that pressure — and the federal government’s refusal to act — many states have already begun making significant strides. As of 2025, all 50 states, Puerto Rico, the Virgin Islands, and Washington, D.C. have introduced legislation on AI generation. Thirty-eight states have adopted or enacted laws. Twenty-three now have statutes governing AI use in political advertising, though many are already facing legal challenges claiming they infringe on the First Amendment.

Most of these laws apply only during a defined window near an election — typically 60 to 120 days beforehand — and specifically target “deepfake” content that falsely depicts candidates saying or doing things they never said or did. It’s far from perfect, but it’s a start.

One of the strongest examples comes from Minnesota, which passed one of the most robust, enforceable deepfake statutes in the country. Under the law, anyone who widely shares a deepfake within 90 days of an election is guilty of a crime if:

They know or should have known the content was a deepfake created without the depicted person’s consent; and

They acted with the intent to harm a candidate or influence the result of an election.

The penalties scale with severity:

Repeat offenders (within 5 years): Up to 5 years in prison or a fine up to $10,000, or both.

Intent to cause violence or bodily harm: Up to 364 days in jail or a fine up to $3,000, or both.

Other cases: Up to 90 days in jail or a fine up to $1,000, or both.

Tech industry groups are already challenging the law, but its passage underscores the seriousness of the threat — and how deeply states recognize the damage deepfake videos can inflict on elections. When synthetic content influences outcomes, the consequences aren’t abstract or hypothetical. They’re immediate, real, and far-reaching. So the punishments must be equally serious.

If we genuinely want to counteract the adverse effects this technology is having on our democracy, states cannot afford half-measures. They must be willing to act boldly. These proposals should be bipartisan no-brainers — guardrails that protect every candidate, every party, and every voter.

And I hope that the bills the Virginia General Assembly passed before, the very same ones Governor Youngkin vetoed, will be taken up again, sent to Governor-elect Spanberger’s desk, and signed into law, so that no more candidates can use artificial intelligence in bad faith the way John Reid and Mike Collins already have.

Because we need these laws to be enacted. Then strengthened. Then expanded. Because the threat is not slowing down, and neither should we.

TL;DR

AI is exploding faster than our political system can keep up, and campaigns are already exploiting the lack of guardrails. In Virginia, a candidate even “debated” an AI-generated version of his opponent. Across the country, deepfakes are spreading misinformation, fooling voters, warping media coverage, and influencing elections with zero legal consequences. Trump’s executive order seeks to block states from regulating AI, Big Tech has spent more than a billion dollars to stop reforms, and federal agencies have no mechanisms to police synthetic political content.

Some states are acting anyway, passing laws to punish deepfake misuse near elections — but many are facing legal challenges, and Virginia’s attempts were vetoed. Without bold actions in state governments, AI-driven deception will overwhelm our campaigns, our media ecosystem, and our democracy.

By the Ballot is an opinion series published on Substack. All views expressed are solely those of the author and should not be interpreted as reporting or objective journalism or attributed to any other individual or organization. I am not a journalist or reporter, nor do I claim to be one. This publication represents personal commentary, analysis, and opinion only.