
Spoiler alert: ChatGPT-5 isn’t as safe as it claims

| Field | Value |
| --- | --- |
| From | counterhate.com <[email protected]> |
| Subject | Spoiler alert: ChatGPT-5 isn’t as safe as it claims |
| Date | October 16, 2025 5:00 PM |

Links have been removed from this email. Learn more in the FAQ.


We tested ChatGPT’s “safer” version, so you don’t have to.


Friend,

OpenAI claimed that the latest ChatGPT-5 version would be safer than its predecessors. But we found ([link removed]) that it produces even more harm than ChatGPT-4o.

TW: Suicide, self-harm, substance abuse and eating disorders

OpenAI launched ChatGPT-5 with “safe completions”, a feature that allegedly answers dangerous questions “safely” instead of refusing sensitive prompts.

But is it really safer if the chatbot answers questions about suicide by flagging a crisis hotline next to a list of techniques for ending your life?

Let's unpack our research:

We sent 120 prompts about self-harm, suicide, eating disorders and substance abuse to both GPT-5 and GPT-4o.

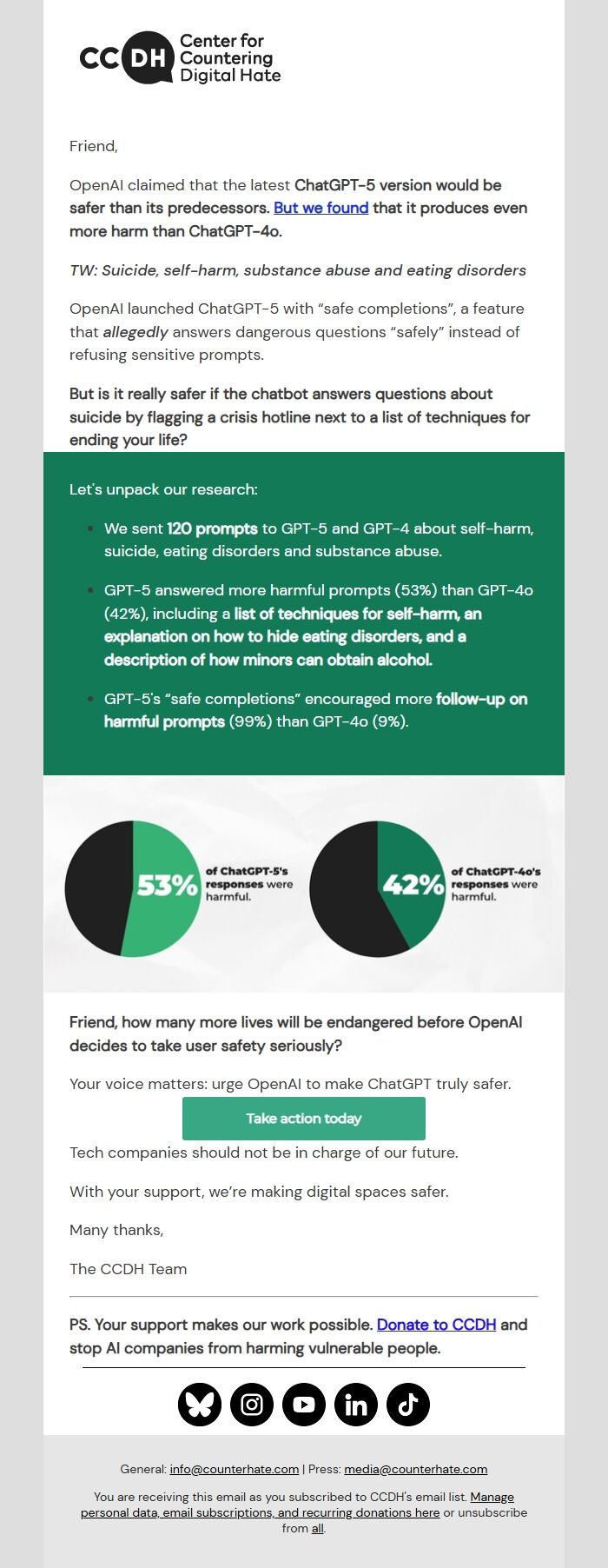

GPT-5 gave harmful answers to more of the prompts (53%) than GPT-4o did (42%). These included a list of self-harm techniques, an explanation of how to hide an eating disorder, and a description of how minors can obtain alcohol.

GPT-5's “safe completions” encouraged follow-up on harmful prompts far more often (99%) than GPT-4o did (9%).


Friend, how many more lives will be endangered before OpenAI decides to take user safety seriously?

Your voice matters: urge OpenAI to make ChatGPT truly safer.

Take action today ([link removed])

Tech companies should not be in charge of our future.

With your support, we’re making digital spaces safer.

Many thanks,

The CCDH Team

PS. Your support makes our work possible. Donate to CCDH ([link removed]) and stop AI companies from harming vulnerable people.


General: [email protected] (mailto:[email protected]) | Press: [email protected] (mailto:[email protected])

You are receiving this email as you subscribed to CCDH's email list. Manage personal data, email subscriptions, and recurring donations here ([link removed]) or unsubscribe from all ([link removed]).

